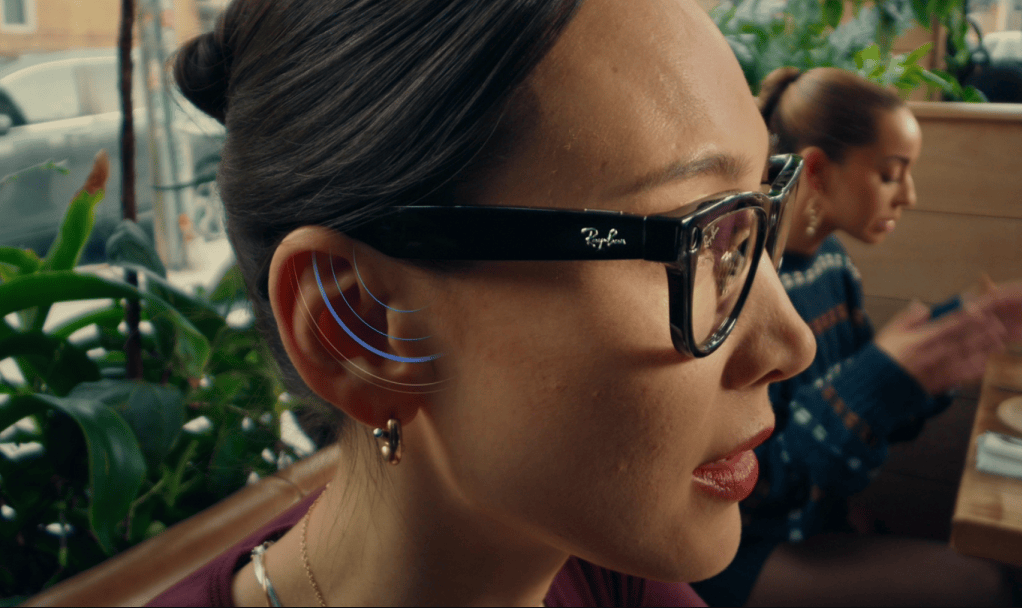

Meta’s smart glasses get AI feature that focuses on human conversation in noisy places

With this update, Meta is shifting the narrative around smart glasses away from content capture and toward everyday utility.

Meta is repositioning its smart glasses as tools for real-world interaction after rolling out a new update that prioritises clearer, more natural conversations.

The software upgrade introduces a feature called Conversation Focus, which uses artificial intelligence and directional audio to amplify nearby voices while reducing background noise, allowing wearers to hear people more clearly in busy environments.

The feature relies on the glasses’ built-in microphones and open-ear speakers to detect where a conversation is coming from and selectively boost speech in that direction.

Instead of blocking out surrounding sounds, the system is designed to preserve awareness of the environment while making dialogue easier to follow in places such as cafes, crowded streets or social gatherings.

Users can control the level of voice amplification through touch gestures or the companion app.

Earlier versions of the glasses focused largely on taking photos, recording video and interacting with Meta’s AI assistant.

Conversation Focus marks a move into assistive audio, positioning the glasses as a lightweight alternative to earbuds or hearing-aid-style devices for people who struggle with noise-heavy settings.

By enhancing how people hear and communicate, the company is betting that smart glasses can become socially acceptable, always-on companions rather than niche gadgets.

The update is currently reaching early users, with wider availability expected in the coming months.

In addition, the glasses are getting another update that lets you use Spotify to play a song that matches what’s in your current view.

How well Conversation Focus works in practice remains to be tested. The feature will initially be available on Ray-Ban Meta and Oakley Meta HSTN smart glasses in the US and Canada before being rolled out to users globally.

Meta is not alone in using smart accessories to help with hearing: Apple is also gradually adding similar features to its AirPods Pro models.
